By Michael Asten From: The Australian

THE Copenhagen climate change summit closed two weeks ago in confusion, disagreement and, for some, disillusionment. When the political process shows such a lack of unanimity, it is pertinent to ask whether the science behind the politics is as settled as some participants maintain.

Earlier this month (The Australian, December 9) I commented on recently published results showing huge swings in atmospheric carbon dioxide, both up and down, at a time of global cooling 33.6 million years ago.

Paul Pearson and co-authors, in a letter (The Weekend Australian, December 11), took exception to my use of their data and claimed I misrepresented their research. I reject that claim. I quoted their data (the veracity of which they do not contest) but offered an alternative hypothesis, namely that the present global warming theory (which was not the subject of their study) is inconsistent with the CO2-temperature variations of a past age.

Some senior scientists, who are adherents of orthodox global warming theory, do not like authors publishing data that can be used to argue against orthodoxy, a point made by unrelated authors with startling clarity in the Climategate leaked emails from the University of East Anglia.

In the scientific method, however, re-examination of data and formulation of alternative hypotheses is the essence of scientific debate. In any case, the debate on the link between atmospheric CO2 and global temperature will continue since it is not dependent on a single result.

Another example is a study by Richard Zeebe and colleagues, published in Nature Geoscience, of a release of CO2 and an increase in temperature 55 million years ago. At this time there was an increase in global temperature of between 5C and 9C, from a temperature regime slightly warmer than today’s (that I will call moderate Earth) to greenhouse temperatures. It can be argued this example may have a message for humanity because the rate of release of CO2 into the atmosphere at the time of this warming was of a similar order to the rate of anthropogenic release today. However, the analogy turns out to be incomplete when the data is compared with present estimates of climate sensitivity to atmospheric CO2, and Zeebe and his colleagues conclude that the large temperature increase cannot be explained by our existing understanding of the CO2-temperature linkage. Indeed, they write, “our results imply a fundamental gap in our understanding of the amplitude of global warming associated with large and abrupt climate perturbations. This gap needs to be filled to confidently predict future climate change.”

I argue there are at least two possible hypotheses to explain the data in this study: either the link between atmospheric CO2 content and global temperature increase is significantly greater (that is, more dangerous) than the existing models show or some mechanism other than atmospheric CO2 is a significant or the main factor influencing global temperature.

The first hypothesis is consistent with climate change orthodoxy. Recent writings on climate sensitivity by James Hansen are consistent with it, as was the Intergovernmental Panel on Climate Change in its pre-Copenhagen update, The Copenhagen Diagnosis.

Indeed, the 26 authors of the IPCC update went a step further, and encouraged the 46,000 Copenhagen participants with the warning: “A rapid carbon release, not unlike what humans are causing today, has also occurred at least once in climate history, as sediment data from 55 million years ago show. This ‘Palaeocene-Eocene thermal maximum’ brought a major global warming of 5C, a detrimental ocean acidification and a mass extinction event. It serves as a stark warning to us today.”

We have to treat such a warning cautiously because, as Pearson and his colleagues pointed out in their letter two weeks ago, “We caution against any attempt to derive a simple narrative linking CO2 and climate on these large time scales. This is because many other factors come into play, including other greenhouse gases, moving continents, shifting ocean currents, dramatic changes in ocean chemistry, vegetation, ice cover, sea level and variations in the Earth’s orbit around the sun.”

Sound science also requires us to consider the second of the above two hypotheses. Otherwise, if we attempt to reconcile Zeebe’s observation by inferring climate sensitivity to CO2 is greater than that used for current models, how do we explain Pearson’s observation of huge swings in atmospheric CO2, both up and down, which appear poorly correlated with temperatures cooling from greenhouse Earth to moderate Earth?

The two geological results discussed both show some discrepancies between observation and model predictions. Such discrepancies do not in any sense reduce the merit of the respective authors’ work; rather they illustrate a healthy and continuing process of scientific discovery.

In addition, unrelated satellite data analyses published in the past two years by physicist David Douglass and distinguished atmospheric scientist John Christy in two journals, International Journal of Climatology and Energy and Environment, provide observational evidence that climate sensitivity associated with CO2 is less than that used in present climate modelling, by a factor of about three.

Thus we have two geological examples and two satellite data studies pointing towards a lesser role of CO2 in global warming. This argument does not discount the reality of global warming during the past century or the potential consequences should it continue at the same rate, but it does suggest we need a broader framework in considering our response. The Copenhagen summit exposed intense political differences in proposals to manage global warming. Scientists are also not unanimous in claiming to understand the complex processes driving climate change and, more important, scientific studies do not unambiguously point to a single solution. Copenhagen will indeed prove to be a historic meeting if it ushers in more open-minded debate.

Michael Asten is a professorial fellow in the school of geosciences at Monash University, Melbourne.

By Kenneth P. Green, For The Calgary Herald

Responses to “Climategate” (the leaked e-mails from Britain’s University of East Anglia and its Climatic Research Unit) remind me of the line “Are your feet wet? Can you see the pyramids? That’s because you’re in denial.”

Climate catastrophists like Al Gore and the UN’s Rajendra Pachauri are downplaying Climategate: it’s only a few intemperate scientists; there’s no real evidence of wrongdoing; now let’s persecute the whistleblower. In Calgary, the latest fellow trying to use the Monty Python “nothing to see here, move along” routine is Prof. David Mayne Reid, who penned a column last week denying the importance of Climategate.

Unfortunately for Reid, old saws won’t work in the Internet age: Climategate has blazed across the Internet, blogosphere, and social networking sites. Even environmentalist and writer George Monbiot has recognized that the public’s perception of climate science will be damaged extensively, calling for one of the Climategate ringleaders to resign.

What’s catastrophic about Climategate is that it reveals a science as broken as Michael Mann’s hockey stick, which, despite Reid’s protestations, has been shown to be a misleading chart that erases a 400-year stretch of warm temperatures (called the Medieval Warm Period) and a more recent Little Ice Age that ended in the mid-1800s. No amount of hand-waving will restore the credibility of climate science while holding onto rubbish like that.

Climategate reveals skulduggery the general public can understand: that a tightly-linked clique of scientists were behaving as crusaders. Their letters reveal they were working in what they repeatedly labelled a “cause” to promote a political agenda.

That’s not science, that’s a crusade. When you cherry-pick, discard, nip, tuck, and tape disparate bits of data into the most alarming portrayal you can in the name of a “cause,” you’re not engaged in science, but in the production of propaganda. And this clique tried to subvert the peer-review process as well. They attempted to keep skeptics out of peer-reviewed journals, which then let them claim that skeptic research wasn’t peer-reviewed: a convenient, circular (and dishonest) way to discredit skeptics.

Finally, people know that a fish rots from its head. The Climatic Research Unit at the University of East Anglia was considered the top climate research community. It was the source of a vast swath of the information that was then funnelled into the supposedly “authoritative” reports of the United Nations Intergovernmental Panel on Climate Change.

If scientific objectivity is corrupt at the top, there’s every reason to think that the rot spreads through the entire body. And evidence suggests it has. A Russian think-tank recently revealed that the climate temperature record compiled by the Climatic Research Unit cherry-picked data from only 25 per cent of Russia’s climate monitoring sites, the sites closest to urban areas and therefore biased by the urban heat island effect. The excluded stations covered 40 per cent of Russia’s total land mass, and Russia itself accounts for 12.5 per cent of all the Earth’s land mass.

Reid’s indignation about Climategate is beyond ludicrous. “It is wrong,” intones Reid, “to castigate people for things said in private, and often taken out of context.” He equates the response to Climategate with a “lynch mob.” Funny, the professor seems to have highly selective indignation; he is apparently unaware of the unremitting attacks on people skeptical of climate science or policy by climate scientists and politicians.

People skeptical of any aspect of climate change have long been called “deniers,” an odious linkage with Holocaust denial, and various luminaries have called for them to be drowned, jailed, and tried for crimes against humanity. One prominent columnist called skepticism treason against the very Earth itself.

As for indignation about the release of private correspondence, where was Reid’s indignation when Greenpeace, looking for something to spin into an incriminating picture, stole skeptic Chris Horner’s trash? Where was his indignation a few years ago when scientist Steve Schroeder showed a routine letter of mine to another climate scientist (Andrew Dessler), who posted it to the Internet where it was spun into the scurrilous accusation that I was trying to bribe UN scientists? Reid’s indignation is the chutzpah of a man who kills his family then wants pity because he’s an orphan.

The Climategate scandal, like others in biology and medicine, erodes the credibility of both the scientists involved and the institution of scientific research. And it should: it has become evident that there is a lot of rot going on in the body of science, and too little effort made to fix it.

A start could be made. Scientists should begin by practicing the scientific method: release all data, and release all assumptions and methods used to process the data at the time of publication. Make it available to researchers (even lay researchers) who are outside the clique so the work can be checked. Had the researchers involved in Climategate done this from the beginning, instead of circling their wagons and refusing to allow outsiders to check their work, they would have taken less hectoring. As a bonus for them, Climategate would never have happened. See post here.

Former IPCC reviewer Kenneth P. Green has his doctorate in environmental science and engineering and is an advisor to the Frontier Center for Public Policy (http://www.fcpp.org). Green is a Resident Scholar at the American Enterprise Institute.

----------------------------

Best 2009 Climate Related Cartoons

Climate Change Fraud has compiled a list of climate-related cartoons from over the past year into one post. Please feel free to share this post with your friends. And to all a great 2010!


--------------------------

Met Office and CRU bow to public pressure: publish data subset and code

By Steve McIntyre, Climate Audit

The UK Met Office has released a large tranche of station data, together with code.

Only last summer, the Met Office had turned down my FOI request for station data, saying that the provision of station data to me would threaten the course of UK international relations. Apparently, these excuses have somehow ceased to apply.

Last summer the Met Office stated:

“The Met Office received the data information from Professor Jones at the University of East Anglia on the strict understanding by the data providers that this station data must not be publicly released. If any of this information were released, scientists could be reluctant to share information and participate in scientific projects with the public sector organisations based in the UK in future. It would also damage the trust that scientists have in those scientists who happen to be employed in the public sector and could show the Met Office ignored the confidentiality in which the data information was provided.

However, the effective conduct of international relations depends upon maintaining trust and confidence between states and international organisations. This relationship of trust allows for the free and frank exchange of information on the understanding that it will be treated in confidence. If the United Kingdom does not respect such confidences, its ability to protect and promote United Kingdom interests through international relations may be hampered.

The Met Office are not party to information which would allow us to determine which countries and stations data can or cannot be released as records were not kept, or given to the Met Office, therefore we cannot release data where we have no authority to do so.”

Some of the information was provided to Professor Jones on the strict understanding by the data providers that this station data must not be publicly released and it cannot be determined which countries or stations data were given in confidence as records were not kept. The Met Office received the data from Professor Jones on the proviso that it would not be released to any other source and to release it without authority would seriously affect the relationship between the United Kingdom and other Countries and Institutions.

The Met Office announced the release, stating that the “station records were produced by the Climatic Research Unit, University of East Anglia, in collaboration with the Met Office Hadley Centre.”

The station data zipfile here is described as a “subset of the full HadCRUT3 record of global temperatures” consisting of:

a network of individual land stations that has been designated by the World Meteorological Organization for use in climate monitoring. The data show monthly average temperature values for over 1,500 land stations.

The stations that we have released are those in the CRUTEM3 database that are also either in the WMO Regional Basic Climatological Network (RBCN) and so freely available without restrictions on re-use; or those for which we have received permission from the national met. service which owns the underlying station data.

I haven’t parsed the data set yet to see what countries are not included in the subset and/or what stations are not included in the subset.

The release was previously reported by Bishop Hill and John Graham-Cumming, who’s already done a preliminary run of the source code made available at the new webpage.

We’ve reported on a previous incident where the Met Office had made untrue statements in order to thwart an FOI request. Is this change of heart an admission of error in their FOI refusal last summer, or has there been a relevant change in their legal situation (as distinct from bad publicity)?

See post and comments here.

Icecap Note: This will be the so-called ‘value-added’ data with no corresponding raw data or documentation of adjustments made. It is a token, get-them-off-our-backs gesture. Rest assured, the community will dig and find the truth, though time will be required.

By Christopher Booker and Richard North, UK Telegraph

No one in the world exercised more influence on the events leading up to the Copenhagen conference on global warming than Dr Rajendra Pachauri, chairman of the UN’s Intergovernmental Panel on Climate Change (IPCC) and mastermind of its latest report in 2007.

Although Dr Pachauri is often presented as a scientist (he was even once described by the BBC as “the world’s top climate scientist"), as a former railway engineer with a PhD in economics he has no qualifications in climate science at all.

What has also almost entirely escaped attention, however, is how Dr Pachauri has established an astonishing worldwide portfolio of business interests with bodies which have been investing billions of dollars in organisations dependent on the IPCC’s policy recommendations.

These outfits include banks, oil and energy companies and investment funds heavily involved in ‘carbon trading’ and ‘sustainable technologies’, which together make up the fastest-growing commodity market in the world, estimated soon to be worth trillions of dollars a year.

Today, in addition to his role as chairman of the IPCC, Dr Pachauri occupies more than a score of such posts, acting as director or adviser to many of the bodies which play a leading role in what has become known as the international ‘climate industry’. It is remarkable that only very recently has the staggering scale of Dr Pachauri’s links to so many of these concerns come to light, inevitably raising questions as to how the world’s leading ‘climate official’ can also be personally involved in so many organisations which stand to benefit from the IPCC’s recommendations.

The issue of Dr Pachauri’s potential conflict of interest was first publicly raised last Tuesday when, after giving a lecture at Copenhagen University, he was handed a letter by two eminent ‘climate sceptics’. One was Stephen Fielding, the Australian Senator who sparked the revolt which recently led to the defeat of the Australian government’s ‘cap and trade scheme’. The other, from Britain, was Lord Monckton, a longtime critic of the IPCC’s science, who has recently played a key part in stiffening opposition to a cap and trade bill in the US Senate.

Their open letter first challenged the scientific honesty of a graph prominently used in the IPCC’s 2007 report, and shown again by Pachauri in his lecture, demanding that he should withdraw it. But they went on to question why the report had not declared Pachauri’s personal interest in so many organisations which seemingly stood to profit from its findings.

The letter, which included information first disclosed in last week’s Sunday Telegraph, was circulated to all the 192 national conference delegations, calling on them to dismiss Dr Pachauri as IPCC chairman because of recent revelations of his conflicting interests.

The original power base from which Dr Pachauri has built up his worldwide network of influence over the past decade is the Delhi-based Tata Energy Research Institute, of which he became director in 1981 and director-general in 2001. Now renamed The Energy Research Institute, TERI was set up in 1974 by India’s largest privately-owned business empire, the Tata Group, with interests ranging from steel, cars and energy to chemicals, telecommunications and insurance (and now best-known in the UK as the owner of Jaguar, Land Rover, Tetley Tea and Corus, Britain’s largest steel company).

Although TERI has extended its sponsorship since the name change, the two concerns are still closely linked. In India, Tata exercises enormous political power, shown not least in the way it has managed to displace hundreds of thousands of poor tribal villagers in the eastern states of Orissa and Jharkhand to make way for large-scale iron mining and steelmaking projects.

Initially, when Dr Pachauri took over the running of TERI in the 1980s, his interests centred on the oil and coal industries, which may now seem odd for a man who has since become best known for his opposition to fossil fuels. He was, for instance, a director until 2003 of Indian Oil, the country’s largest commercial enterprise, and until this year remained a director of the National Thermal Power Generating Corporation, its largest electricity producer.

In 2005, he set up GloriOil, a Texas firm specialising in technology which allows the last remaining reserves to be extracted from oilfields otherwise at the end of their useful life. However, since Pachauri became a vice-chairman of the IPCC in 1997, TERI has vastly expanded its interest in every kind of renewable or sustainable technology, in many of which the various divisions of the Tata Group have also become heavily involved, such as its project to invest $1.5 billion (930 million pounds) in vast wind farms.

Dr Pachauri’s TERI empire has also extended worldwide, with branches in the US, the EU and several countries in Asia. TERI Europe, based in London, of which he is a trustee (along with Sir John Houghton, one of the key players in the early days of the IPCC and formerly head of the UK Met Office), is currently running a project on bio-energy, financed by the EU. Another project, co-financed by our own Department for Environment, Food and Rural Affairs and the German insurance firm Munich Re, is studying how India’s insurance industry, including Tata, can benefit from exploiting the supposed risks of exposure to climate change. Quite why Defra and UK taxpayers should fund a project to increase the profits of Indian insurance firms is not explained.

Even odder is the role of TERI’s Washington-based North American offshoot, a non-profit organisation, of which Dr Pachauri is president. Conveniently sited on Pennsylvania Avenue, midway between the White House and the Capitol, this body unashamedly sets out its stall as a lobbying organisation, to “sensitise decision-makers in North America to developing countries’ concerns about energy and the environment”.

TERI-NA is funded by a galaxy of official and corporate sponsors, including four branches of the UN bureaucracy; four US government agencies; oil giants such as Amoco; two of the leading US defence contractors; Monsanto, the world’s largest GM producer; the WWF (the environmentalist campaigning group which derives much of its own funding from the EU) and two world leaders in the international ‘carbon market’, between them managing more than $1 trillion (620 billion pounds) worth of assets.

All of this is doubtless useful to the interests of Tata back in India, which is heavily involved not just in bio-energy, renewables and insurance but also in ‘carbon trading’, the worldwide market in buying and selling the right to emit CO2. Much of this is administered at a profit by the UN under the Clean Development Mechanism (CDM) set up under the Kyoto Protocol, which the Copenhagen treaty was designed to replace with an even more lucrative successor.

Under the CDM, firms and consumers in the developed world pay for the right to exceed their ‘carbon limits’ by buying certificates from those firms in countries such as India and China which rack up ‘carbon credits’ for every renewable energy source they develop - or by showing that they have in some way reduced their own ‘carbon emissions’.

It is one of these deals, reported in last week’s Sunday Telegraph, which is enabling Tata to transfer three million tonnes of steel production from its Corus plant in Redcar to a new plant in Orissa, thus gaining a potential 1.2 billion pounds in ‘carbon credits’ (and putting 1,700 people on Teesside out of work).

More than three-quarters of the world ‘carbon’ market benefits India and China in this way. India alone has 1,455 CDM projects in operation, worth $33 billion (20 billion pounds), many of them facilitated by Tata - and it is perhaps unsurprising that Dr Pachauri also serves on the advisory board of the Chicago Climate Exchange, the largest and most lucrative carbon-trading exchange in the world, which was also assisted by TERI in setting up India’s own carbon exchange.

But this is peanuts compared to the numerous other posts to which Dr Pachauri has been appointed in the years since the UN chose him to become the world’s top ‘climate-change official’. In 2007, for instance, he was appointed to the advisory board of Siderian, a San Francisco-based venture capital firm specialising in ‘sustainable technologies’, where he was expected to provide the Fund with ‘access, standing and industrial exposure at the highest level’.

In 2008 he was made an adviser on renewable and sustainable energy to the Credit Suisse bank and the Rockefeller Foundation. He joined the board of the Nordic Glitnir Bank, as it launched its Sustainable Future Fund, looking to raise funding of 4 billion pounds. He became chairman of the Indochina Sustainable Infrastructure Fund, whose CEO was confident it could soon raise 100 billion pounds.

In the same year he became a director of the International Risk Governance Council in Geneva, set up by EDF and E.On, two of Europe’s largest electricity firms, to promote ‘bio-energy’. This year Dr Pachauri joined the New York investment fund Pegasus as a ‘strategic adviser’, and was made chairman of the advisory board to the Asian Development Bank, strongly supportive of CDM trading, whose CEO warned that failure to agree a treaty at Copenhagen would lead to a collapse of the carbon market.

The list of posts now held by Dr Pachauri as a result of his new-found world status goes on and on. He has become head of Yale University’s Climate and Energy Institute, which enjoys millions of dollars of US state and corporate funding. He is on the climate change advisory board of Deutsche Bank. He is Director of the Japanese Institute for Global Environmental Strategies and was until recently an adviser to Toyota Motors. Recalling his origins as a railway engineer, he is even a policy adviser to SNCF, France’s state-owned railway company.

Meanwhile, back home in India, he serves on an array of influential government bodies, including the Economic Advisory Committee to the prime minister, holds various academic posts and has somehow found time in his busy life to publish 22 books.

Dr Pachauri never shrinks from giving the world frank advice on all matters relating to the menace of global warming. The latest edition of TERI News quotes him as telling the US Environmental Protection Agency that it must go ahead with regulating US carbon emissions without waiting for Congress to pass its cap and trade bill.

It reports how, in the days before Copenhagen, he called on the developed nations which had been historically responsible for the global warming crisis to make ‘concrete commitments’ to aiding developing countries such as India with funding and technology, while insisting that India could not agree to binding emissions targets. India, he said, must bargain for large-scale subsidies from the West for developing solar power, and Western funds must be made available for geo-engineering projects to suck CO2 out of the atmosphere.

As a vegetarian Hindu, Dr Pachauri repeated his call for the world to eat less meat to cut down on methane emissions (as usual he made no mention of what was to be done about India’s 400 million sacred cows). He further called for a ban on serving ice in restaurants and for meters to be fitted to all hotel rooms, so that guests could be charged a carbon tax on their use of heating and air-conditioning.

One subject the talkative Dr Pachauri remains silent on, however, is how much money he is paid for all these important posts, which must run into millions of dollars. Not one of the bodies for which he works publishes his salary or fees, and this notably includes the UN, which refuses to reveal how much we all pay him as one of its most senior officials.

As for TERI itself, Dr Pachauri’s main job for nearly 30 years, it is so coy about money that it does not even publish its accounts - the financial statement amounts to two income and expenditure pie charts which contain no detailed figures. Dr Pachauri is equally coy about TERI’s links with Tata, the company which set it up in the 1970s and whose name it continued to bear until 2002, when it was changed to just The Energy Research Institute. A spokesman at the time said ‘we have not severed our past relationship with the Tatas, the change is only for convenience’.

But the real question mark over TERI’s director-general remains over the relationship between his highly lucrative commercial jobs and his role as chairman of the IPCC. TERI have, for example, become a preferred bidder for Kuwaiti contracts to clean up the mess left by Saddam Hussein in their oilfields in 1991. The $3 billion (1.9 billion pounds) cost of the contracts has been provided by the UN. If successful, this would be the tenth time TERI have benefited from a contract financed by the UN.

Certainly no one values the services of TERI more than the EU, which has included Dr Pachauri’s institute as a partner in no fewer than 12 projects designed to assist in devising the EU’s policies on mitigating the effects of the global warming predicted by the IPCC. But whether those 1,700 Corus workers on Teesside will next month be so happy to lose their jobs to India, thanks to the workings of that international ‘carbon market’ about which Dr Pachauri is so enthusiastic, is quite another matter. See the post and more here.

NOTE: See Open Letter to Pachauri by Lord Monckton and Senator Fielding (Australia) here on SPPI. SPPI also notes: SPPI’s Lord Monckton is currently scheduled to appear on Fox News on Monday sometime between 4 and 5pm Eastern, on Neil Cavuto’s program. Neil’s away on holiday, so this week Brian Sullivan is the anchor. Monckton will likely give a colorful account of the whole Copenhagen experience.

Note: See summary of recent findings on blatant data manipulation and irregularities here.

By Joseph D’Aleo in Pajamas Media

As James Delingpole, in the Telegraph, noted Wednesday:

“Climategate just got much, much bigger. And all thanks to the Russians who, with perfect timing, dropped this bombshell just as the world’s leaders are gathering in Copenhagen to discuss ways of carbon-taxing us all back to the dark ages. On Tuesday, we heard via the Ria Novosti agency that the Moscow-based Institute of Economic Analysis (IEA) issued a report claiming that the Hadley Center for Climate Change had probably tampered with Russian-climate data:

The IEA believes that Russian meteorological-station data did not substantiate the anthropogenic global-warming theory. Analysts say Russian meteorological stations cover most of the country’s territory, and that the Hadley Center had used data submitted by only 25% of such stations in its reports. Over 40% of Russian territory was not included in global-temperature calculations for some other reasons, rather than the lack of meteorological stations and observations.

The data of stations located in areas not listed in the Hadley Climate Research Unit Temperature UK (HadCRUT) survey often does not show any substantial warming in the late 20th century and the early 21st century.

The HadCRUT database includes specific stations providing incomplete data and highlighting the global-warming process, rather than stations facilitating uninterrupted observations. They concluded climatologists use the incomplete findings of meteorological stations far more often than those providing complete observations and data from stations located in large populated centers that are influenced by the urban-warming effect more frequently than the correct data of remote stations.

Paint-by-Numbers Science

Imagine a paint-by-numbers kit with 12 colors of the spectrum, from purple and blue through green to yellow, orange and red, each numbered. When you finish coloring the areas in the coloring book or canvas with the appropriate color number, you have a color painting for the fridge.

Suppose you got a version with only colors 1 and 2 marked. You have the colors - but what you end up with is a patchwork of two colors on a white background, with lines outlining the other areas. You could guess at the other colors, but the end result may not be what the original creator had in mind.

Believe it or not, this very simple analogy applies to the claims of global warming.

In the climate change map of the world, where the Earth is depicted as flat (and skeptics are called flat-earthers, naturally) and with a latitude/longitude grid as the “to be colored” areas, the purples and blues represent cold temperatures and yellows, oranges, and reds represent warm. It appears the stations chosen in Russia were those that were likely to be warmer - reds and oranges. Further, with no information on what color to use for the areas where stations were ignored, guesses were made to fill in the empty grid boxes by extrapolating only from the warmer subset of stations.

More reds and oranges.

Partners in Crime: NOAA and NASA Complicit

Russia was not the only area that underwent cherry-picking, nor is CRU the only cherry-picker. NOAA’s global climate database (GHCN) - according to CRU’s Phil Jones in the following email - mirrors the CRU data under attack:

Almost all the data we have in the CRU archive is exactly the same as in the Global Historical Climatology Network (GHCN) archive used by the NOAA National Climatic Data Center.

And NASA uses the GHCN, applying their own adjustments, as we reported in this story:

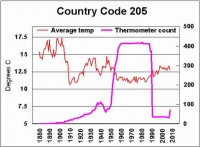

Perhaps one of the biggest issues with the global data is station dropout after 1990. Over 6000 stations were active in the mid-1990s. Just over 1000 are in use today. The stations that dropped out were mainly rural and at higher latitudes and altitudes - all cooler stations. This alone should account for part of the assessed warming. China had 100 stations in 1950, over 400 in 1960, then only 25 by 1990. This changing distribution makes any assessment of accurate change impossible.
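A toy calculation shows the mechanism this paragraph describes. It assumes, purely for illustration, that a network average is a plain mean of absolute station temperatures; the station names and values are invented, and nothing here is offered as the procedure NOAA, NASA, or CRU actually use. No station changes temperature, yet dropping the two coldest ones raises the average.

```python
from statistics import mean

# Hypothetical stations and their (constant) mean annual temperatures in degC.
# None of these stations warms or cools over time.
STATIONS = {
    "coastal_city": 18.0,
    "inland_town": 15.0,
    "rural_highland": 6.0,
    "arctic_outpost": -8.0,
}


def network_average(active):
    """Plain mean of absolute temperatures over the stations still reporting."""
    return mean(STATIONS[name] for name in active)


before = network_average(STATIONS)  # all four stations reporting
after = network_average(["coastal_city", "inland_town"])  # cooler stations dropped

print(f"network average before dropout: {before:.2f} degC")
print(f"network average after dropout:  {after:.2f} degC")
print(f"apparent change with no real change: {after - before:+.2f} degC")
```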

We now know that the Russian station count dropped from 476 to 121, meaning “over 40% of Russian territory was not properly included in global-temperature calculations for some other reasons, rather than the lack of meteorological stations and observations.”

In the IEA report, there is a chart showing CRU’s selective use of 25% of the Russian data created 0.64C more warming than was exhibited by using 100% of the raw data. Given the huge area Russia represents (11.5% of global land surface area), this significantly affects global land temperatures.
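To put the 0.64C figure in proportion, a back-of-the-envelope estimate can scale it by Russia's share of land area. This assumes the difference applies uniformly across Russia and that land temperatures are averaged by area; real gridded averages depend on coverage and weighting, so treat the result only as a rough order of magnitude.

$$
\Delta T_{\text{land}} \approx f_{\text{Russia}}\,\Delta T_{\text{Russia}} = 0.115 \times 0.64\,^{\circ}\mathrm{C} \approx 0.074\,^{\circ}\mathrm{C}
$$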

We know from the maps that NASA produces using NOAA GHCN data that Canada is largely missing. So are Greenland, the Arctic, much of Africa, Brazil, and parts of Australia. (See this post.)

To fill in these large holes, data was extrapolated from great distances away. Often the data came from lower latitude, lower elevation, and higher population centers. In addition to station dropout, the number of missing months increased by as much as tenfold in many of the remaining areas. This required filling in of data from surrounding stations, again sometimes considerable distances away from the missing location. Another opportunity for error, if not mischief.

These gaps allowed the data centers to extrapolate the data from the warm spots to the missing data grids, or to blend the warm stations with the fewer remaining cooler ones.
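The infilling described here can be sketched as a distance-weighted average of nearby stations. The 1200 km search radius and the linear fall-off in weight are illustrative assumptions, and `infill` is a hypothetical helper, not the code of any data center. The sketch makes the point concrete: an empty grid point takes on whatever values its surviving neighbours report, so if the nearby cooler stations are gone, the estimate is drawn from the warmer ones.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def infill(target_lat, target_lon, stations, radius_km=1200.0):
    """Estimate a value for an empty grid point from stations within radius_km.

    stations: iterable of (lat, lon, value). Each station's weight falls
    linearly from 1 at zero distance to 0 at radius_km (an assumed scheme).
    Returns None when no station lies inside the radius.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for lat, lon, value in stations:
        distance = great_circle_km(target_lat, target_lon, lat, lon)
        weight = max(0.0, 1.0 - distance / radius_km)
        weighted_sum += weight * value
        total_weight += weight
    return weighted_sum / total_weight if total_weight > 0 else None
```

Because the weights depend only on distance, the estimate for a grid point shifts toward whichever stations remain in range once others stop reporting.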

More reds and oranges.

There was indeed a global warming period from 1979 to 1998, thanks to the natural cycles of the oceans and sun - which had produced a similar warming from around 1920 to 1940, and a cooling from the 1940s to the late 1970s. In the adjustments made by all the data centers, they cooled off the 1930s and 1940s warm blip by adjusting land and ocean temperatures down, and elevated the late 20th century and this decade.

Even with that, a cooling trend has been observed since 2001.

They applied no correction for urban growth or spread, which can produce an artificial but very localized warming (see recent Georgia Tech release here). And in the United States, Anthony Watts - in a volunteer survey of over 1000 of the 1221 instrument stations - had found 89% were poorly or very poorly sited, using NOAA’s own criteria. This resulted in a warm bias of over 1 degree C (earlier analysis here).

A few preliminary surveys in other parts of the world indicate the U.S. is not alone.

Satellites have measured global temperatures since 1979 and have shown warming. Originally the satellite record was in close agreement with the data centers’ global numbers, but the two have gradually diverged, and the gap is now approaching 0.5 degrees Celsius (see this post).

Given all this manipulation and cherry-picking, you should ignore the press releases that will undoubtedly be coming from NOAA (when they return from snowy, cold Copenhagen), NASA, and Hadley about how this has been among the warmest years, and how the last decade was the warmest on record.

There indeed has been man-made global warming - but it’s from the paint-by-numbers men and women at NOAA, NASA, and Hadley… and now, just like that, it’s gone. Read more here.

---------------------

Climategate: Something’s Rotten in Denmark - and East Anglia, Asheville, and New York City

By Joseph D’Aleo, Pajamas Media exclusive

The familiar phrase was spoken by Marcellus in Shakespeare’s Hamlet - first performed around 1600, at the start of the Little Ice Age. “Something is rotten in the state of Denmark” is the exact quote. It recognizes that fish rots from the head down, and it means that all is not well at the top of the political hierarchy. Shakespeare proved to be Nostradamus. Four centuries later - at the start of what could be a new Little Ice Age - the rotting fish is Copenhagen.

The smell in the air may be from the leftover caviar at the banquet tables, or perhaps from the exhaust of 140 private jets and 1200 limousines commissioned by the attendees when they discovered there was to be no global warming evident in Copenhagen. (In fact, the cold will deepen and give way to snow before they leave, an extension of the Gore Effect.) Note: it already has.

But the metaphorical stench comes from the well-financed bad science and bad policy, promulgated by the UN, and the complicity of the so-called world leaders, thinking of themselves as modern-day King Canutes (the Viking king of Denmark, England, and Norway - who ironically ruled during the Medieval Warm Period this very group has tried to deny). His flatterers thought his powers “so great, he could command the tides of the sea to go back.”

Unlike the warmists and the compliant media, Canute knew otherwise, and indeed the tide kept rising. Nature will do what nature always did - change.

It’s the data, stupid

If we torture the data long enough, it will confess. (Ronald Coase, Nobel Prize for Economic Sciences, 1991)

The Climategate whistleblower proved what those of us dealing with data for decades know to be the case - namely, data was being manipulated. The IPCC and their supported scientists have worked to remove the pesky Medieval Warm Period, the Little Ice Age, and the period emailer Tom Wigley referred to as the “warm 1940s blip,” and to pump up the recent warm cycle.

Attention has focused on the emails dealing with Michael Mann’s hockey stick and other proxy attempts, most notably those of Keith Briffa. Briffa was conflicted in this whole process, noting he “[tried] hard to balance the needs of the IPCC with science, which were not always the same,” and that he knew “...there is pressure to present a nice tidy story as regards ‘apparent unprecedented warming in a thousand years or more in the proxy data.’”

As Steve McIntyre has blogged:

“Much recent attention has been paid to the email about the “trick” and the effort to “hide the decline.” Climate scientists have complained that this email has been taken “out of context.” In this case, I’m not sure that it’s in their interests that this email be placed in context because the context leads right back to the role of IPCC itself in “hiding the decline” in the Briffa reconstruction.”

In the area of data, I am more concerned about the coordinated effort to manipulate the instrumental data (which was appended onto the proxy data, truncated in 1960 when the trees showed a decline - the so-called “divergence problem”) to produce an exaggerated warming that would point to man’s influence. I will be the first to admit that man does have some climate effect - but the effect is localized. Up to half the warming since 1900 is due to land use changes and urbanization, confirmed most recently by Georgia Tech’s Brian Stone (2009), Anthony Watts (2009), Roger Pielke Sr., and many others. The rest of the warming is also man-made - but the men are at CRU, at NOAA’s NCDC and NASA’s GISS, and at the grant-fed universities and computer labs.

Programmer Ian “Harry” Harris, in the Harry_Read_Me.txt file, commented about:

“[The] hopeless state of their (CRU) data base. No uniform data integrity, it’s just a catalogue of issues that continues to grow as they’re found...I am very sorry to report that the rest of the databases seem to be in nearly as poor a state as Australia was. There are hundreds if not thousands of pairs of dummy stations, one with no WMO and one with, usually overlapping and with the same station name and very similar coordinates. I know it could be old and new stations, but why such large overlaps if that’s the case? Aarrggghhh! There truly is no end in sight.

This whole project is SUCH A MESS. No wonder I needed therapy!!

I am seriously close to giving up, again. The history of this is so complex that I can’t get far enough into it before by head hurts and I have to stop. Each parameter has a tortuous history of manual and semi-automated interventions that I simply cannot just go back to early versions and run the updateprog. I could be throwing away all kinds of corrections - to lat/lons, to WMOs (yes!), and more. So what the hell can I do about all these duplicate stations?”

Climategate has sparked a flurry of examinations of the global data sets - not only at CRU, but in nations worldwide and at the global data centers at NOAA and NASA. Though the Hadley Centre implied their data was in agreement with other data sets and thus trustworthy, the truth is other data centers are complicit in the data manipulation fraud.

....

When you hear press releases from NOAA, NASA, or Hadley claiming a month, year, or decade ranks among the warmest ever recorded, keep in mind: they have tortured the data, and it has confessed. See much more in the rest of this three-page post here. See Roger Pielke Sr.’s much-appreciated comments on this post here.


The Little Ice Age Thermometers - The Study of Climate Variability from 1600 to 2009

By Climate Reason

A clickable map with the historical station records can be found here. A discussion of the UHI (urban heat island) factor is here.

----------------------------

The Smoking Gun At Darwin Zero

By Willis Eschenbach at Watts Up With That here

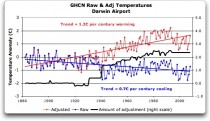

So I’m still on my multi-year quest to understand the climate data. You never know where this data chase will lead. This time, it has landed me in Australia, by way of Professor Wibjorn Karlen’s remark about the Australian record: “NASA [GHCN] only presents 3 stations covering the period 1897-1992. What kind of data is the IPCC Australia diagram based on? If any trend it is a slight cooling. However, if a shorter period (1949-2005) is used, the temperature has increased substantially. The Australians have many stations and have published more detailed maps of changes and trends.”

The folks at CRU told Wibjorn that he was just plain wrong. Here’s what they said is right, the record that Wibjorn was talking about, Fig. 9.12 in the UN IPCC Fourth Assessment Report, showing Northern Australia (below, enlarged here).

Here’s every station in the UN IPCC specified region which contains temperature records that extend up to the year 2000 no matter when they started, which is 30 stations (below, enlarged here).

Still no similarity with IPCC. So I looked at every station in the area. That’s 222 stations. Here’s that result (below, enlarged here).

The answer is, these graphs all use the raw GHCN data. But the IPCC uses the “adjusted” data. GHCN adjusts the data to remove what it calls “inhomogeneities”. So on a whim I thought I’d take a look at the first station on the list, Darwin Airport, so I could see what an inhomogeneity might look like when it was at home.

Then I went to look at what happens when the GHCN removes the “inhomogeneities” to “adjust” the data. Of the five raw datasets, the GHCN discards two, likely because they are short and duplicate existing longer records. The three remaining records are first “homogenized” and then averaged to give the “GHCN Adjusted” temperature record for Darwin.
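The final averaging step can be sketched as follows. `combine_records` is a hypothetical helper and the numbers are invented; it shows only the year-by-year averaging and leaves out the homogenization step that, per the text, GHCN applies first.

```python
from statistics import mean


def combine_records(records):
    """Average several records for one location, year by year.

    records: list of dicts mapping year -> annual mean temperature (degC).
    Each year's combined value is the mean of whichever records report that
    year; offsets between records are NOT corrected here.
    """
    years = sorted(set().union(*records))
    return {year: mean(r[year] for r in records if year in r) for year in years}


# Hypothetical, invented values for two overlapping records.
record_a = {1941: 28.0, 1942: 28.2}
record_b = {1942: 28.4, 1943: 28.1}
combined = combine_records([record_a, record_b])
print(combined)
```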

To my great surprise, here’s what I found. To explain the full effect, I am showing this with both datasets starting at the same point (rather than ending at the same point as they are often shown) (below, enlarged here).

YIKES! Before getting homogenized, temperatures in Darwin were falling at 0.7 Celsius per century...but after the homogenization, they were warming at 1.2 Celsius per century. And the adjustment that they made was over two degrees per century...when those guys “adjust”, they don’t mess around.
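The per-century figures here are linear trends. A minimal sketch, assuming an ordinary least-squares fit (the post does not state the fitting method), shows how such trends are computed and why an adjustment's effect adds directly: the OLS slope is linear in the data, so the trend of the adjusted series equals the raw trend plus the trend of the adjustment series itself. The series are invented to reproduce the quoted -0.7 and +1.2 degree figures; they are not Darwin's records.

```python
def trend_per_century(years, temps):
    """Ordinary least-squares slope of temps on years, in degC per century."""
    n = len(years)
    if n < 2 or n != len(temps):
        raise ValueError("need two or more paired observations")
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    return 100.0 * sxy / sxx  # degC/year scaled to degC/century


# Invented series chosen to reproduce the quoted trends; not Darwin's data.
years = list(range(1941, 1991))
raw = [29.0 - 0.007 * (y - 1941) for y in years]  # -0.7 degC/century
adjustment = [0.019 * (y - 1941) for y in years]  # +1.9 degC/century
adjusted = [r + a for r, a in zip(raw, adjustment)]

print(f"raw trend:        {trend_per_century(years, raw):+.2f} degC/century")
print(f"adjusted trend:   {trend_per_century(years, adjusted):+.2f} degC/century")
print(f"adjustment trend: {trend_per_century(years, adjustment):+.2f} degC/century")
```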

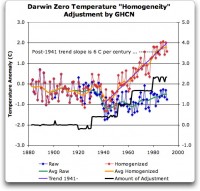

Intrigued by the curious shape of the average of the homogenized Darwin records, I then went to see how they had homogenized each of the individual station records. What made up that strange average shown in Fig. 7? I started at zero with the earliest record. Here is Station Zero at Darwin, showing the raw and the homogenized versions (below, enlarged here).

Yikes again, double yikes! What on earth justifies that adjustment? How can they do that? We have five different records covering Darwin from 1941 on. They all agree almost exactly. Why adjust them at all? They’ve just added a huge artificial totally imaginary trend to the last half of the raw data! Now it looks like the IPCC diagram in Figure 1, all right...but a six degree per century trend? And in the shape of a regular stepped pyramid climbing to heaven? What’s up with that? See full DAMNING post here.

---------------------------

Feeling Warmer Yet?

Study by New Zealand Climate Science Coalition

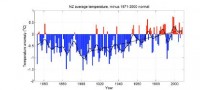

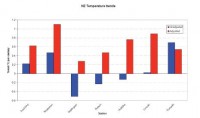

There have been strident claims that New Zealand is warming. The Intergovernmental Panel on Climate Change (IPCC), among other organisations and scientists, alleges that, along with the rest of the world, we have been heating up for over 100 years.

But now, a simple check of publicly-available information proves these claims wrong. In fact, New Zealand’s temperature has been remarkably stable for a century and a half. So what’s going on?

New Zealand’s National Institute of Water & Atmospheric Research (NIWA) is responsible for New Zealand’s National Climate Database. This database, available online, holds all New Zealand’s climate data, including temperature readings, since the 1850s. Anybody can go and get the data for free. That’s what we did, and we made our own graph. Before we see that, let’s look at the official temperature record. This is NIWA’s graph of temperatures covering the last 156 years: From NIWA’s web site -

The official version, enlarged here. Mean annual temperature over New Zealand, from 1853 to 2008 inclusive, based on between 2 (from 1853) and 7 (from 1908) long-term station records. The blue and red bars show annual differences from the 1971 - 2000 average, the solid black line is a smoothed time series, and the dotted [straight] line is the linear trend over 1909 to 2008 (0.92C/100 years).

This graph is the centrepiece of NIWA’s temperature claims. It contributes to global temperature statistics and the IPCC reports. It is partly why our government is insisting on introducing an emissions trading scheme (ETS) and participating in the climate conference in Copenhagen. But it’s an illusion.

Dr Jim Salinger (who no longer works for NIWA) started this graph in the 1980s when he was at CRU (the Climatic Research Unit at the University of East Anglia, UK), and it has since been updated with the most recent data. It’s published on NIWA’s website and in their climate-related publications.

The actual thermometer readings

To get the original New Zealand temperature readings, you register on NIWA’s web site, download what you want and make your own graph. We did that, but the result looked nothing like the official graph. Instead, we were surprised to get this:


Straight away you can see there’s no slope - either up or down. The temperatures are remarkably constant way back to the 1850s. Of course, the temperature still varies from year to year, but the trend stays level - statistically insignificant at 0.06C per century since 1850. Putting these two graphs side by side, you can see huge differences. What is going on?
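The post does not say how it judged the 0.06C per century trend to be statistically insignificant. One common approach, assumed here, is to compare the OLS slope with its standard error: as a rough rule, |t| below about 2 means the trend cannot be distinguished from zero at roughly the 5% level. The helper name and the series are hypothetical.

```python
import math


def trend_with_t_stat(years, temps):
    """OLS trend in degC/century, its standard error, and the t-statistic.

    Assumes independent residuals. Real temperature series are often
    autocorrelated, which would make this standard error too small.
    """
    n = len(years)
    if n < 3 or n != len(temps):
        raise ValueError("need three or more paired observations")
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps)) / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    se = math.sqrt(rss / (n - 2) / sxx)
    if se == 0:
        t = 0.0 if slope == 0 else math.copysign(math.inf, slope)
    else:
        t = slope / se
    return 100.0 * slope, 100.0 * se, t


# Invented series: a 0.06 degC/century trend plus +/-0.5 degC year-to-year noise.
NOISE = (0.5, -0.5, -0.5, 0.5)
years = list(range(1850, 2010))
temps = [12.0 + 0.0006 * (y - 1850) + NOISE[(y - 1850) % 4] for y in years]

trend, se, t = trend_with_t_stat(years, temps)
print(f"trend {trend:+.3f} +/- {se:.3f} degC/century, t = {t:.2f}")
```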

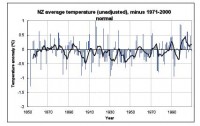

Why does NIWA’s graph show strong warming, but graphing their own raw data looks completely different? Their graph shows warming, but the actual temperature readings show none whatsoever! Have the readings in the official NIWA graph been adjusted?

It is relatively easy to find out. We compared raw data for each station (from NIWA’s web site) with the adjusted official data, which we obtained from one of Dr Salinger’s colleagues. Requests for this information from Dr Salinger himself over the years, by different scientists, have long gone unanswered, but now we might discover the truth.

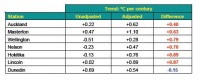

Proof of man-made warming? What did we find? First, the station histories are unremarkable. There are no reasons for any large corrections. But we were astonished to find that strong adjustments have indeed been made. About half the adjustments actually created a warming trend where none existed; the other half greatly exaggerated existing warming. All the adjustments increased or even created a warming trend, with only one (Dunedin) going the other way and slightly reducing the original trend.


The shocking truth is that the oldest readings have been cranked way down and later readings artificially lifted to give a false impression of warming, as documented below. There is nothing in the station histories to warrant these adjustments and to date Dr Salinger and NIWA have not revealed why they did this.


See much more of this detailed analysis here. NIWA responds to the charges here, but Anthony Watts uses a photo of an instrument located at NIWA headquarters to cast doubt on their claims here. See also how the government is hell-bent on moving forward with carbon emission schemes, choosing to believe agenda-driven government scientists, here.

May I suggest that those of you capable of extracting the data try the same kind of analysis for other regions. Note that back in 2007, an Icecap post inspired Steve McIntyre’s story “Central Park: Will the real Slim Shady please stand up?” on Central Park raw versus adjusted data here. Read the comments. As one poster asked, could this be a ‘smoking gun’ on data manipulation?

------------------------

No Global Warming in 351-Year British Temperature Record

By the Carbon Sense Coalition here

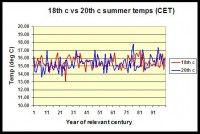

The Central England Temperature (CET) record, starting in 1659 and maintained by the UK Met Office, is the longest unbroken temperature record in the world. Temperature data is averaged for a number of weather stations regarded as being representative of Central England rather than measuring temperature at one arbitrary geographical point identified as the centre of England.

A Scottish chemist, Wilson Flood, has collected and analysed the 351-year CET record. Here (below, enlarged here) is the comparison of the 18th century with the 20th century:

Wilson Flood comments:

“Summers in the second half of the 20th century were warmer than those in the first half and it could be argued that this was a global warming signal. However, the average CET summer temperature in the 18th century was 15.46 degC while that for the 20th century was 15.35 degC. Far from being warmer due to assumed global warming, comparison of actual temperature data shows that UK summers in the 20th century were cooler than those of two centuries previously.”
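The century comparison is simple arithmetic: 15.46 - 15.35 = 0.11 degC, so by this measure 18th-century summers averaged about a tenth of a degree warmer. A minimal sketch of how such century means might be computed from a monthly record follows; treating summer as June-August and a century as 100 consecutive years from a chosen start are assumptions, since Flood's exact definitions are not given here, and the function name is hypothetical.

```python
from statistics import mean

SUMMER_MONTHS = (6, 7, 8)  # June, July, August: an assumed definition of summer


def century_summer_mean(monthly, start_year):
    """Mean summer temperature over the 100 years beginning at start_year.

    monthly: dict mapping (year, month) -> monthly mean temperature (degC).
    Months missing from the dict are skipped.
    """
    values = [
        monthly[(year, month)]
        for year in range(start_year, start_year + 100)
        for month in SUMMER_MONTHS
        if (year, month) in monthly
    ]
    if not values:
        raise ValueError("no summer data for that century")
    return mean(values)
```

Given a monthly CET series loaded into such a dict, comparing `century_summer_mean(cet, 1701)` with `century_summer_mean(cet, 1901)` would reproduce the kind of comparison quoted above (`cet` is a hypothetical name).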

-------------------------

Karlen Emails about Inability to Replicate IPCC CRU based Nordic Data

By Willis Eschenbach on WUWT

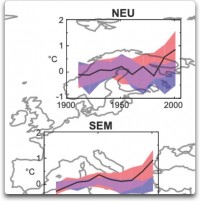

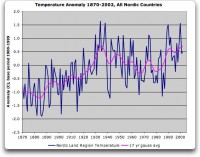

Professor Karlen writes of his attempts to reconstruct the Nordic temperature: “In my analysis, I find an increase from the early 1900s to ca. 1935, a trend down until the mid-1970s and then another increase to about the same temperature level as in the late 1930s.” (below, enlarged here)

“A distinct warming to a temperature about 0.5 deg C above the 1940 level is reported in the IPCC diagrams. I have been searching for this recent increase, which is very important for the discussion about a possible human influence on climate, but I have basically failed to find an increase above the late 1930s.” (below, enlarged here)

See much more here.

-------------------------

Skewed science

By Phil Green, Financial Post

A French scientist’s temperature data show results different from the official climate science. Why was he stonewalled? Climate Research Unit emails detail efforts to deny access to global temperature data.

The global average temperature is calculated by climatologists at the Climatic Research Unit (CRU) at the University of East Anglia. The temperature graph the CRU produces from its monthly averages is the main indicator of global temperature change used by the Intergovernmental Panel on Climate Change, and it shows a steady increase in global near-surface temperature over the 20th century. Similar graphs for regions of the world, such as Europe and North America, show the same trend. This is consistent with increasing industrialization, growing use of fossil fuels, and rising atmospheric concentrations of carbon dioxide.

It took the CRU workers decades to assemble millions of temperature measurements from around the globe. The earliest measurements they gathered came from the mid 19th century, when mariners threw buckets over the side of their square riggers and hauled them up to measure water temperature. Meteorologists increasingly started recording regular temperature on land around the same time. Today they collect measurements electronically from national meteorological services and ocean-going ships.

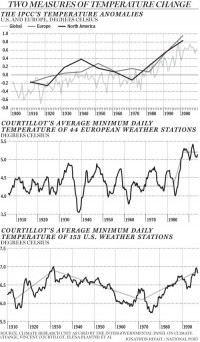

Millions of measurements, global coverage, consistently rising temperatures, case closed: The Earth is warming. Except for one problem. CRU’s average temperature data doesn’t jibe with that of Vincent Courtillot, a French geo-magneticist, director of the Institut de Physique du Globe in Paris, and a former scientific advisor to the French Cabinet. Last year he and three colleagues plotted an average temperature chart for Europe that shows a surprisingly different trend. Aside from a very cold spell in 1940, temperatures were flat for most of the 20th century, showing no warming while fossil fuel use grew. Then in 1987 they shot up by about 1 C and have not shown any warming since (below, enlarged here). This pattern cannot be explained by rising carbon dioxide concentrations, unless some critical threshold was reached in 1987; nor can it be explained by climate models.

Courtillot and Jean-Louis Le Mouel, a French geo-magneticist, and three Russian colleagues first came into climate research as outsiders four years ago. The Earth’s magnetic field responds to changes in solar output, so geomagnetic measurements are good indicators of solar activity. They thought it would be interesting to compare solar activity with climatic temperature measurements.

Their first step was to assemble a database of temperature measurements and plot temperature charts. To do that, they needed raw temperature measurements that had not been averaged or adjusted in any way. Courtillot asked Phil Jones, the scientist who runs the CRU database, for his raw data, telling him (according to one of the ‘Climategate’ emails that surfaced following the recent hacking of CRU’s computer systems) “there may be some quite important information in the daily values which is likely lost on monthly averaging.” Jones refused Courtillot’s request for data, saying that CRU had “signed agreements with national meteorological services saying they would not pass the raw data onto third parties.” (Interestingly, in another of the CRU emails, Jones said something very different: “I took a decision not to release our [meteorological] station data, mainly because of McIntyre,” referring to Canadian Steve McIntyre, who helped uncover the flaws in the hockey stick graph.)

Courtillot and his colleagues were forced to turn to other sources of temperature measurements. They found 44 European weather stations that had long series of daily minimum temperatures that covered most of the 20th century, with few or no gaps. They removed annual seasonal trends for each series with a three-year running average of daily minimum temperatures. Finally they averaged all the European series for each day of the 20th century.
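The article's description of this method is brief, so the following is one plausible reading, not Courtillot's actual code: a centered running mean spanning a whole number of years (here 3 x 365 days) averages out the annual cycle, and the smoothed series are then averaged across stations for each day. The function names are hypothetical, and the sketch assumes gap-free series of equal length, in line with the "few or no gaps" selection described in the paragraph.

```python
from itertools import accumulate

THREE_YEARS = 3 * 365  # 1095 days; leap days ignored for simplicity (assumption)


def running_mean(series, window=THREE_YEARS):
    """Centered running mean via cumulative sums.

    Positions without a full window on both sides are returned as None.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so it can be centered")
    half = window // 2
    csum = [0.0, *accumulate(series)]  # csum[i] == sum(series[:i])
    out = [None] * len(series)
    for i in range(half, len(series) - half):
        out[i] = (csum[i + half + 1] - csum[i - half]) / window
    return out


def regional_daily_average(stations, window=THREE_YEARS):
    """Smooth each gap-free daily series, then average across stations day by day."""
    if not stations or len({len(s) for s in stations}) != 1:
        raise ValueError("need one or more series of equal length")
    smoothed = [running_mean(s, window) for s in stations]
    result = []
    for day in range(len(stations[0])):
        values = [s[day] for s in smoothed if s[day] is not None]
        result.append(sum(values) / len(values) if values else None)
    return result
```

Cumulative sums keep the smoothing linear in the series length, which matters for century-long daily records of roughly 36,500 values per station.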

CRU, in contrast, calculates average temperatures by month - rather than daily - over individual grid boxes on the Earth’s surface that are 5 degrees of latitude by 5 degrees of longitude, from 1850 to the present. First it makes hundreds of adjustments to the raw data, which sometimes require educated guesses, to try to correct for such things as changes in the type and location of thermometers. It also combines air temperatures and water temperatures from the sea. It uses fancy statistical techniques to fill in gaps of missing data in grid boxes with few or no temperature measurements. CRU then expresses the averages as changes in temperature relative to the 1961-1990 period.
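Assigning stations to 5 x 5 degree boxes and averaging within each box can be sketched as below. This is an assumed, simplified scheme: it averages whatever stations fall in a box and does none of the adjustment, sea-surface blending, or gap filling the paragraph mentions; the function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

BOX_DEG = 5.0  # 5 degrees of latitude by 5 degrees of longitude


def box_index(lat, lon, box_deg=BOX_DEG):
    """(row, col) of the grid box containing a point given in degrees.

    Rows count north from the South Pole; columns count east from 180W.
    """
    n_rows = int(180 / box_deg)
    row = min(int((lat + 90.0) // box_deg), n_rows - 1)  # keep lat=90 in the top row
    col = int(((lon + 180.0) % 360.0) // box_deg)  # wraps lon=180 to column 0
    return row, col


def box_center(row, col, box_deg=BOX_DEG):
    """Latitude and longitude of a box's center, in degrees."""
    return -90.0 + (row + 0.5) * box_deg, -180.0 + (col + 0.5) * box_deg


def grid_box_means(stations, box_deg=BOX_DEG):
    """Mean value per grid box; stations is an iterable of (lat, lon, value)."""
    boxes = defaultdict(list)
    for lat, lon, value in stations:
        boxes[box_index(lat, lon, box_deg)].append(value)
    return {idx: mean(values) for idx, values in boxes.items()}
```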

CRU calls 1961-1990 the “normal” period and the average temperature of this period the “normal.” It subtracts the normal from each monthly average and calls these differences the monthly “anomalies.” A positive anomaly means a temperature was warmer than CRU’s normal. Finally CRU averages the grid-box anomalies over regions such as Europe or over the entire surface of the globe for each month to get the European or global monthly average anomaly. You see the result in the IPCC graph nearby, which shows rising temperatures.
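The normal-and-anomaly calculation, followed by averaging over boxes, can be sketched like this. Weighting each box by the cosine of its central latitude (because 5 x 5 degree boxes shrink toward the poles) is an assumption standing in for whatever area weighting CRU applies; the function names are hypothetical.

```python
import math


def monthly_normals(monthly, base_start=1961, base_end=1990):
    """Mean for each calendar month over the base period (inclusive).

    monthly: dict mapping (year, month) -> temperature (degC) for one grid box.
    """
    sums = [0.0] * 13
    counts = [0] * 13
    for (year, month), value in monthly.items():
        if base_start <= year <= base_end:
            sums[month] += value
            counts[month] += 1
    return {m: sums[m] / counts[m] for m in range(1, 13) if counts[m]}


def monthly_anomalies(monthly, base_start=1961, base_end=1990):
    """Each value minus the normal for its calendar month."""
    normals = monthly_normals(monthly, base_start, base_end)
    return {(y, m): v - normals[m] for (y, m), v in monthly.items() if m in normals}


def area_weighted_mean(boxes):
    """Mean of (center_latitude_deg, anomaly) pairs, weighted by cos(latitude)."""
    weights = [math.cos(math.radians(lat)) for lat, _ in boxes]
    total = sum(weights)
    return sum(w * a for w, (_, a) in zip(weights, boxes)) / total
```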

The decision to treat the 1961-1990 period as ‘normal’ was CRU’s. Had CRU chosen a more recent period as the normal, the IPCC graph would have shown less warming, as discussed in one of the Climategate emails, from David Parker of the UK Met Office. In it, Parker advised Jones not to switch to a newer period, saying “anomalies will seem less positive than before if we change to newer normals, so the impression of global warming will be muted.” That’s hardly a compelling scientific justification!
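A toy calculation shows what changing the normal does to the numbers. In this Python sketch the series values are made up (they are not CRU data), and `anomalies_vs_baseline` is a hypothetical helper that subtracts the mean of whatever base period it is given.

```python
from statistics import mean
from typing import Dict


def anomalies_vs_baseline(series: Dict[int, float], start: int, end: int) -> Dict[int, float]:
    """Anomalies of an annual series relative to its mean over [start, end]."""
    normal = mean(t for year, t in series.items() if start <= year <= end)
    return {year: t - normal for year, t in series.items()}


toy = {1961: 0.0, 1971: 1.0, 2000: 2.0}  # made-up values for illustration
old = anomalies_vs_baseline(toy, 1961, 1990)  # {1961: -0.5, 1971: 0.5, 2000: 1.5}
new = anomalies_vs_baseline(toy, 1971, 2000)  # {1961: -1.5, 1971: -0.5, 2000: 0.5}
```

Moving to the newer normal lowers every anomaly by the same amount (here 1.0, the difference between the two normals), which is the sense in which the anomalies “seem less positive”; the differences between years are unchanged.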

In addition to calculating temperature averages for Europe, Courtillot and his colleagues calculated temperature averages for the United States. Once again, their method yielded more refined averages that were not a close match with the coarser CRU temperature averages. The warmest period was in 1930, slightly above the temperatures at the end of the 20th century. This was followed by 30 years of cooling, then another 30 years of warming.

Courtillot’s calculations show the importance of making climate data freely available to all scientists to calculate global average temperature according to the best science. Phil Jones, in response to the email hacking, said that CRU’s global temperature series show the same results as “completely independent groups of scientists.” Yet CRU would not share its data with independent scientists such as Courtillot and McIntyre, and Courtillot’s series are clearly different. Read more here.

Icecap Note: Finally, see this exhaustive study by E.M. Smith on Musings from the Chiefio of NOAA’s GHCN, the “global garbage bin” that CRU and the media are citing as confirmation that poor Phil Jones and Hadley did not manipulate data. See also how, in China, the Dragon Ate the Thermometers, in this analysis by E.M. Smith here.

Also, this animated GIF shows the manipulation of US temperatures (h/t Smokey on WUWT).

------------------------

Would You Like Your Temperature Data Homogenized, or Pasteurized?

By Basil Copeland on Watts Up With That

A Smoldering Gun From Nashville, TN

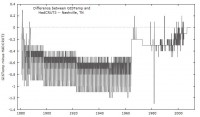

The hits just keep on coming. About the same time that Willis Eschenbach revealed “The Smoking Gun at Darwin Zero,” the UK’s Met Office released a “subset” of the HadCRUT3 data set used to monitor global temperatures. I grabbed a copy of “the subset” and then began looking for a location near me (I live in central Arkansas) that had a long and generally complete station record that I could compare to a “homogenized” set of data for the same station from the GISTemp data set. I quickly, and more or less randomly, decided to take a closer look at the data for Nashville, TN. In the HadCRUT3 subset, this is “72730” in the folder “72.” A direct link to the homogenized GISTemp data used is here. After transforming the row data to column data (see the end of the post for a “bleg” about this), the first thing I did was plot the differences between the two series (below, enlarged here):
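The row-to-column step and the difference series might look something like the following minimal Python sketch. It assumes each file lists one year per line followed by twelve whitespace-separated monthly values, and it treats -99.0 and 999.9 as missing-value flags; both the layout and the flags are assumptions to check against each data set's own documentation. The function names are hypothetical, and which series is subtracted from which is a choice the post does not spell out.

```python
from typing import Dict, List, Sequence, Tuple

YearMonth = Tuple[int, int]

# Hypothetical missing-value flags; check each data set's documentation.
MISSING = (-99.0, 999.9)


def rows_to_monthly(
    lines: Sequence[str], missing: Sequence[float] = MISSING
) -> Dict[YearMonth, float]:
    """Convert 'year m1 ... m12' rows into a {(year, month): value} series."""
    out: Dict[YearMonth, float] = {}
    for line in lines:
        fields = line.split()
        # Skip header lines and anything that is not a year plus 12 values.
        if len(fields) != 13 or not fields[0].isdigit():
            continue
        year = int(fields[0])
        for month, raw in enumerate(fields[1:], start=1):
            value = float(raw)
            if value not in missing:
                out[(year, month)] = value
    return out


def differences(
    a: Dict[YearMonth, float], b: Dict[YearMonth, float]
) -> List[Tuple[YearMonth, float]]:
    """a minus b for every (year, month) present in both, in time order."""
    return [(k, a[k] - b[k]) for k in sorted(a.keys() & b.keys())]
```

For example, `differences(rows_to_monthly(gistemp_lines), rows_to_monthly(hadcrut_lines))` would give GISTemp minus HadCRUT3 for each month present in both, where `gistemp_lines` and `hadcrut_lines` are hypothetical lists of lines read from the two files.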

The GISTemp homogeneity adjustment looks a little hockey-stickish, and induces an upward trend by reducing older historical temperatures more than recent historical temperatures. This has the effect of turning what is a negative trend in the HadCRUT3 data into a positive trend in the GISTemp version (below, enlarged here):

So what would appear to be a general cooling trend over the past ~130 years at this location, when using the unadjusted HadCRUT3 data, becomes a warming trend when the homogeneity adjustment is applied.
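The mechanism is easy to reproduce with an ordinary least-squares trend. In the minimal Python sketch below the numbers are invented purely for illustration (they are not the Nashville data): a series that cools steadily acquires a warming trend once an adjustment lowers the older values more than the recent ones.

```python
from typing import Sequence


def ols_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# Made-up numbers for illustration only; not the Nashville record.
years = [0, 1, 2, 3]
raw = [10.0, 9.9, 9.8, 9.7]           # cools by 0.1 per step
adjustment = [-0.6, -0.4, -0.2, 0.0]  # older values lowered the most
adjusted = [r + a for r, a in zip(raw, adjustment)]

print(round(ols_slope(years, raw), 3))       # -0.1
print(round(ols_slope(years, adjusted), 3))  # 0.1
```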

“There is nothing to see here, move along.” I do not buy that. Whether or not the homogeneity adjustment is warranted, it has an effect that calls into question just how much the earth has in fact warmed over the past 120-150 years (the period covered, roughly, by GISTemp and HadCRUT3). There has to be a better, more “robust” way of measuring temperature trends, one that is not so sensitive that it turns negative trends into positive trends (which we’ve now seen it do twice, first with Darwin Zero and now with Nashville). I believe there is.

Temperature Data: Pasteurized versus Homogenized

In a recent series of posts, here, here, and with Anthony here, I’ve been promoting a method of analyzing temperature data that reveals the full range of natural climate variability. Metaphorically, this strikes me as trying to make a case for “pasteurizing” the data, rather than “homogenizing” it. In homogenization, the object is to “mix things up” so that it is “the same throughout.” When milk is homogenized, this prevents the cream from rising to the top, thus preventing us from seeing the “natural variability” that is in milk. But with temperature data, I want very much to see the natural variability in the data. And I cannot see that with linear trends fitted through homogenized data. It may be a hokey analogy, but I want my data pasteurized - as clean as it can be - but not homogenized so that I cannot see the true and full range of natural climate variability. See full post here.

See this post on GISS Raw Station Data Before and After Homogenization for an eye opening view into blatant data manipulation and truncation.

By Chip Knappenberger in SPPI

In science, as in most disciplines, the process is as important as the product. The recent email/data release (aka Climategate) has exposed the process of scientific peer-review as failing. If the process is failing, it is reasonable to wonder what this implies about the product.

Several scientists have come forward to express their view on what light Climategate has shed on these issues. Judith Curry has some insightful views here and here, along with associated comments and replies. Roger Pielke Jr. has an opinion, as no doubt do many others. Certainly a perfect process does not guarantee perfect results, and a flawed process does not guarantee flawed results, but the chances of a good result are much greater with the former than the latter. That’s why the process was developed in the first place.

Briefly, the peer-review process is this: before results are published in the scientific literature and documented for posterity, they are reviewed by one or more scientists who have some working knowledge of the topic but who are not directly associated with the work under consideration. The reviewers are typically anonymous and basically read the paper to determine whether it generally seems like a reasonable addition to the scientific knowledge base, and whether the results seem reproducible given the described data and methodology.

Generally, reviewers do not “audit” the results, that is, spend a lot of effort untangling the details of the data and/or methodologies to see if they are appropriate, or try to reproduce the results for themselves. How much time and effort is put into a peer review varies greatly from case to case and reviewer to reviewer. On most occasions, the reviewers try to include constructive criticism that will help the authors improve their work: the reviewers serve as another set of eyes and minds to look over and consider the research, eyes that are more removed from the research than the co-authors and can perhaps offer different insights and suggestions.

Science most often moves forward in small increments (with a few notable exceptions), and the peer-review process is designed to keep it moving efficiently, with as little backsliding or veering off course as possible. It is not a perfect system, nor, I think, was it ever intended to be. The guys over at RealClimate like to call peer-review a “necessary but not sufficient condition.”

Certainly it is not sufficient. But increasingly, there are indications that its necessity is slipping, and the contents of the released Climategate emails are hastening that slide. Personally, I am not applauding this decline. I think that the scientific literature (as populated through peer-review) provides an unparalleled documentation of the advance of science and that it should not be abandoned lightly. Thus, I am distressed by the general picture of a broken system that is portrayed in the Climategate emails.

Certainly there are improvements that could make the current peer-review system better, but many of these would be difficult to impose on a purely voluntary system. Full audits of the research would make for better published results, but such a requirement is too burdensome on the reviewers, who generally are involved in their own research (among other activities) and would frown upon having to spend a lot of time to delve too deeply into the nitty-gritty details of someone else’s research topic.

An easier improvement to implement would be a double-blind review process in which both the reviewers and the authors were unknown to each other. A few journals incorporate this double-blind review process, but the large majority does not. I am not sure why not. Such a process would go at least part of the way to avoiding pre-existing biases against some authors by some reviewers. Another way around this would be to have a fully open review process, in which the reviewers and author responses were freely available and open for all to see, and perhaps contribute. A few journals in fact have instituted this type of system, but not the majority.

Nature magazine a few years ago hosted a web debate on the state of scientific peer-review and possible ways of improving it. It is worth looking at to see the wide range of views and reviews assembled there. As it now stands, a bias can exist in the current system. That it does exist is evident in the Climategate emails. By all appearances, it seems that some scientists are interested in keeping certain research (and particular researchers) out of the peer-reviewed literature (and the national and international assessments derived from it). While undoubtedly these scientists feel that they are acting in the best interest of science by trying to prevent too much backsliding and thereby keeping things moving forward efficiently, the way that they are apparently going about it is far from acceptable.

Instead of improving the process, it has nearly destroyed it. If the practitioners of peer-review begin to act like members of an exclusive club controlling who and what gets published, they run the risk of sidetracking the true course of science. Even folks with the best intentions can be wrong. Having the process too tightly controlled can end up setting things back much further than a more loosely controlled process, which is better at correcting itself.

Certainly, as a scientist, you want to see your particular branch of science move forward as quickly as possible, but pushing it forward, rather than letting it move of its own accord, can oftentimes prove embarrassing. As it was meant to be, peer-review is a necessary, but not sufficient, condition. As it has become, however, its necessity has been eroded, and blogs have arisen to fill the gap. In my opinion, blogs should serve as discussion places where ideas get worked out, with the final results then submitted to the peer-reviewed literature. To me, blogs are a 21st-century post-seminar beer outing, lunch discussion, or maybe even scientific conference. But they should not be an alternative to the scientific literature, which is a permanent documentation of the development of scientific ideas.

But the rise of blogs as repositories of scientific knowledge will continue if the scientific literature becomes guarded and exclusive. I can only see this throwing the state of science, and the quest for scientific understanding, into disarray as we struggle to figure out how to incorporate blog content into the tested scientific knowledge base. This seems a messy endeavor. Instead, I think that the current peer-review system needs to be either re-established or redefined. The single-blind review system seems outdated. With today’s technology, a totally open process seems preferable and superior, as long as it can be kept within reason. At the very least, double-blind reviews should be the default. Maybe some type of audit system could even be considered by some journals or organizations.

Perhaps some good will yet come out of this whole Climategate mess - a fairer system for the consideration of scientific contribution, one that could less easily be manipulated by a small group of influential, but perhaps misguided, individuals. We can only hope. See PDF.

Confirmation of the Dependence of the ERA-40 Reanalysis Data on the Warm Bias in the CRU Data

By Roger Pielke Sr, Climate Science Blog

There is a remarkable admission in the leaked e-mails from Phil Jones of the dependence of the long-term surface temperature trends in the ERA-40 reanalysis on the surface temperature data from CRU. This is a very important issue, as ERA-40 is used as one metric to assess multi-decadal global surface temperature trends, and has been claimed to be an assessment tool independent of the surface temperature data. The report ECMWF Newsletter No. 115 (Spring 2008) gives an overview of the role of ERA-40 in climate change studies.

The paper by Eugenia (Kalnay) that is presumably being referred to in the Phil Jones e-mails, which I have presented later in this post, is Kalnay, E., and M. Cai, 2003: Impact of urbanization and land-use on climate change. Nature, 423, 528-531.

There are a number of subsequent papers that have built on the ‘observation minus reanalysis’ (OMR) methodology introduced by Eugenia, including:

Kalnay, E., M. Cai, H. Li, and J. Tobin, 2006: Estimation of the impact of land-surface forcings on temperature trends in eastern United States. J. Geophys. Res., 111, D06106, doi:10.1029/2005JD006555.

Lim, Y.-K., M. Cai, E. Kalnay, and L. Zhou, 2005: Observational evidence of sensitivity of surface climate changes to land types and urbanization. Geophys. Res. Lett., 32, L22712, doi:10.1029/2005GL024267.

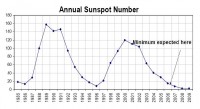

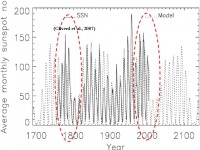

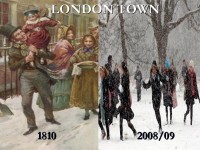

Nunez, M. N., H. H. Ciapessoni, A. Rolla, E. Kalnay, and M. Cai, 2008: Impact of land use and precipitation changes on surface temperature trends in Argentina. J. Geophys. Res., 113, D06111, doi:10.1029/2007JD008638.
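The OMR idea itself is simple arithmetic. In the approach of Kalnay and Cai (2003), observed surface temperature trends are compared against a reanalysis, and the trend in the difference is attributed to surface effects such as urbanization and land use, on the premise that the reanalysis is not built from those surface observations. The minimal Python sketch below, with hypothetical function names and toy inputs, computes that difference trend; if the reanalysis does depend on the surface record, as the Jones e-mails indicate for ERA-40, that premise, and the interpretation built on it, weakens.

```python
from typing import Sequence


def ols_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def omr_trend(
    years: Sequence[float],
    observed: Sequence[float],
    reanalysis: Sequence[float],
) -> float:
    """Linear trend of (observation minus reanalysis), per unit of `years`."""
    if not (len(years) == len(observed) == len(reanalysis)):
        raise ValueError("series must be the same length")
    omr = [o - r for o, r in zip(observed, reanalysis)]
    return ols_slope(years, omr)
```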